How & When To Run Facebook A/B Tests

Facebook strongly encourages A/B testing to help inform your campaign strategy, especially for creative changes, and offers a dedicated tool for running these tests on its Business Manager platform. But is A/B testing right for your campaign? Learn the basics of A/B testing to find out.

What is an A/B test

An A/B test, or split test, is a Facebook tool that helps determine which strategy works best for your objectives. It works by creating a duplicate of your campaign in which you change the variable you want to test. Facebook then divides your budget evenly among the tested campaigns, randomly splits exposure between them, and compares their performance on a cost-per-result basis. Identifying the most cost-efficient ad that still meets your objective lets you get the most out of your campaign.
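The cost-per-result comparison described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not Facebook's implementation: the `Variant` class, the function names, and the spend and result figures are all made up for the example.

```python
from dataclasses import dataclass


@dataclass
class Variant:
    """One arm of the test. All figures used below are hypothetical."""
    name: str
    spend: float   # budget spent on this variant
    results: int   # results delivered, e.g. landing page views

    def cost_per_result(self) -> float:
        # A variant with no results has no meaningful cost per result;
        # treat it as infinitely expensive so it can never look cheaper.
        if self.results == 0:
            return float("inf")
        return self.spend / self.results


def split_budget(total_budget: float, n_variants: int = 2) -> float:
    """Even split: every variant receives the same share of the budget."""
    return total_budget / n_variants


def cheaper_variant(a: Variant, b: Variant) -> Variant:
    """Return the variant with the lower cost per result (a wins ties)."""
    return a if a.cost_per_result() <= b.cost_per_result() else b


share = split_budget(1400.0)  # each variant gets half of the test budget
original = Variant("original", spend=share, results=350)
challenger = Variant("challenger", spend=share, results=400)
print(cheaper_variant(original, challenger).name)
```

With these made-up numbers the challenger costs 1.75 per result against 2.00 for the original. A raw comparison like this does not tell you whether the difference is statistically meaningful or just noise.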

While regular Facebook campaigns already optimize for performance through machine learning, a properly executed A/B test gives you statistically significant results that can be attributed to a specific variable.

What to A/B test

You can let almost any aspect of your campaign battle it out, such as:

- Creative: image variations, text variations, type of creative (video vs. carousel), Facebook best practices vs. current creative

- Audience: demographics, interest targeting, regions, custom-based vs. interest-based

- Delivery optimization

- Landing pages

- Placement: newsfeed vs. stories, Instagram vs. Facebook Messenger

- Product sets

Why A/B test

A/B tests are quick to set up. Creating one is as easy as duplicating a campaign, ad set, or ad from your Ads Manager toolbar and deciding which variable to evaluate. The tool then takes you to Experiments, where you’ll be guided through finalizing the test. The Experiments section is also where you will monitor, edit, and get results from your test, like a marketing mad scientist.

A/B tests are reliable, since Facebook ensures the test audience will be evenly split and statistically comparable:

- there is no audience overlap

- users will only see one variation

- and the budget will be evenly split between the testing variables.
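To make the "no overlap, one variation per user" guarantee concrete, here is a minimal sketch of one common way to split an audience: hashing each user ID into a bucket. How Facebook actually assigns people is not described here, so treat `assign_variant`, `split_audience`, the test ID, and the user IDs as assumptions made for illustration.

```python
import hashlib
from typing import Dict, Iterable, Sequence, Set


def assign_variant(user_id: str, test_id: str,
                   variants: Sequence[str] = ("A", "B")) -> str:
    """Map a user to exactly one variant, the same one on every call."""
    # Hashing test ID + user ID gives a stable, effectively random bucket.
    digest = hashlib.sha256(f"{test_id}:{user_id}".encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


def split_audience(user_ids: Iterable[str], test_id: str,
                   variants: Sequence[str] = ("A", "B")) -> Dict[str, Set[str]]:
    """Group users by variant; a user can land in only one group."""
    groups: Dict[str, Set[str]] = {name: set() for name in variants}
    for user_id in user_ids:
        groups[assign_variant(user_id, test_id, variants)].add(user_id)
    return groups


# Hypothetical audience of 10,000 users.
audience = [f"user-{i}" for i in range(10_000)]
groups = split_audience(audience, test_id="persona-vs-current")
print({name: len(members) for name, members in groups.items()})
```

Because the assignment depends only on the IDs, the same person always sees the same variation, and the two groups come out close to equal in size without sharing any members.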

A/B tests usually do not include a holdout (control) group, but they can if you choose to measure conversion lift instead of cost per result.

How to A/B test

A good tip here is to come up with a hypothesis, or a question, you want to answer with your test. This hypothesis should be something measurable, to help produce results that will lead to a specific action. For example, let’s assume you are looking to improve ad performance and think your campaign should use persona-focused imagery instead of the creative that is currently running. Your hypothesis could then be: “I get a lower cost per landing page view when I use persona-based imagery in my ads.”

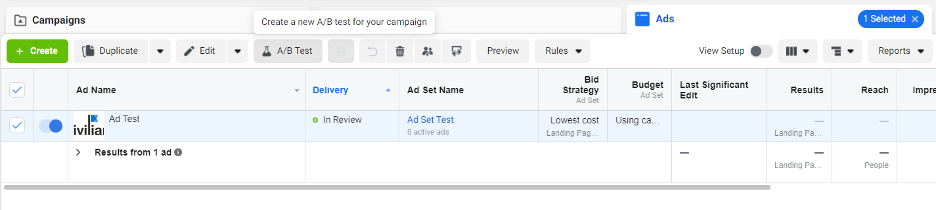

To start your A/B test, you need a published campaign; it can be running or scheduled. Once you know which variable you want to test, select the level that contains the variable (campaign, ad set, or ad) and click the button for A/B testing. Depending on how wide your screen is, the button will be just a half-full flask, a half-full flask with “A/B Test” written next to it, or it may be hidden under “More.”

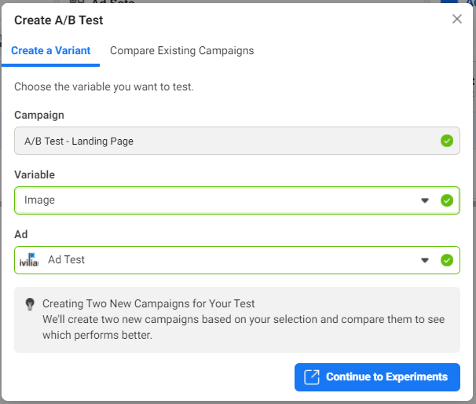

For our example above, you can select the ad you are currently running and click on the A/B testing button. A window will open where you will select the variable you want to test, in this case, image.

Click the blue button saying “Continue to the Experiments.” You will be taken to a Guided Creation window in the Experiments section, where you’ll be prompted to upload the image you want to test.

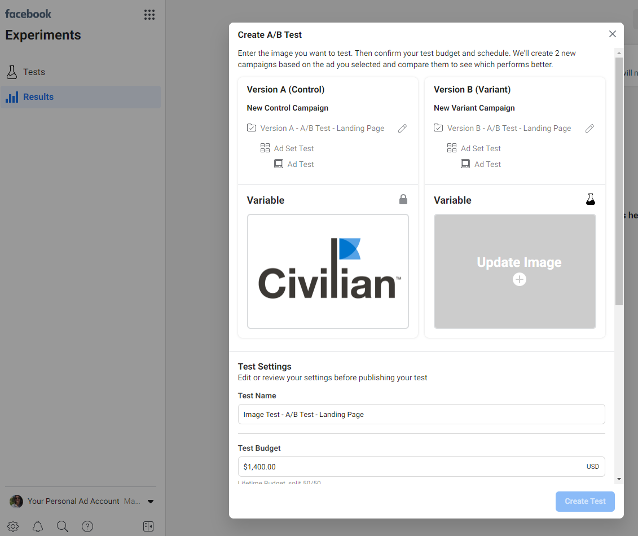

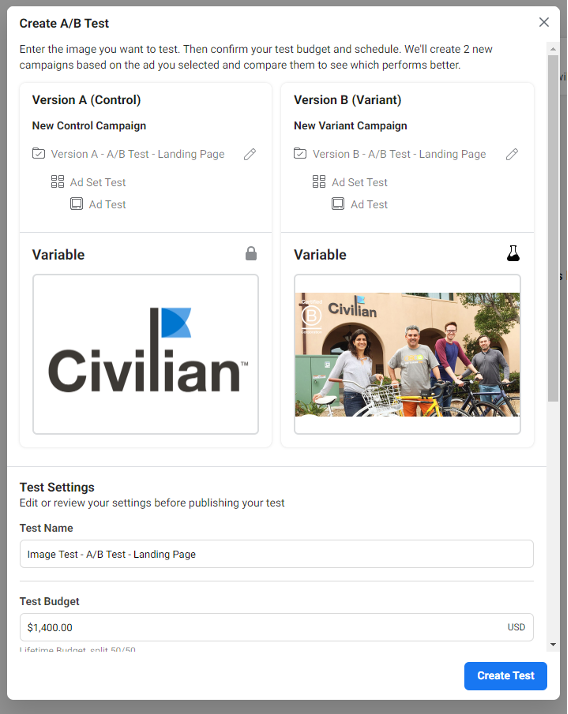

There, you will be able to upload the new variable (in this case, the new image).

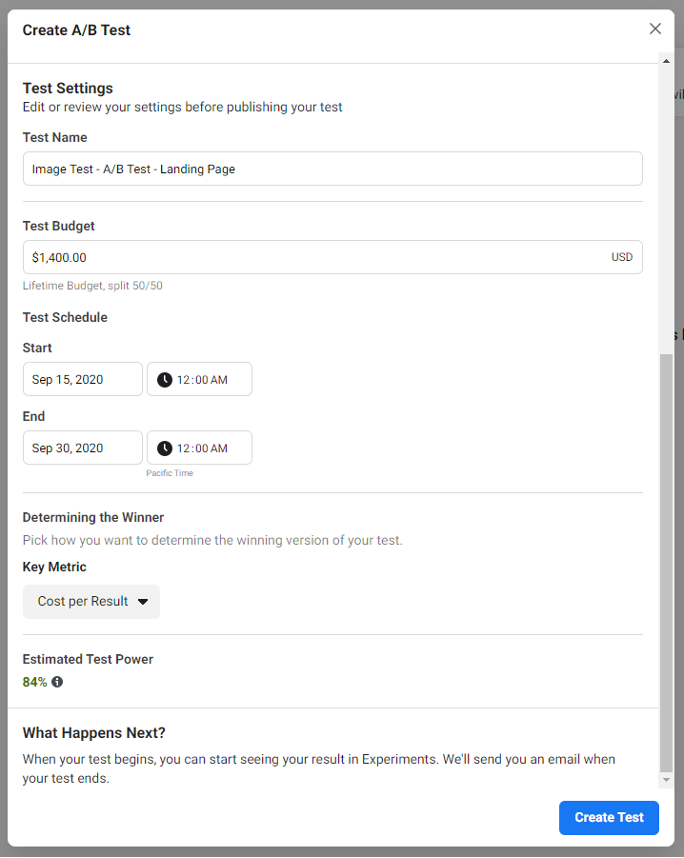

Then fill out the remaining settings of your test:

- The “Test Budget” section will be pre-filled with double the budget of your original campaign. In this example, the original campaign’s budget was $700, so your test budget would be $1,400. Your budget determines your “Test Power,” or the likelihood that your test will detect a difference between the two variables if one exists. A test power above 80% is recommended. Here, we can see the selected budget yields an 84% test power.

- Test schedule, or how long your experiment will run. Tests should usually run for a minimum of 4 days and a maximum of 30 days, depending on your business vertical and objective, but your test might end earlier if there are enough results to determine a winner. It takes around 24 hours for Facebook’s algorithm to optimize the delivery of your ads towards the objective you’ve selected, hence the recommended 4-day minimum: it allows enough time to make sure you’re not reading the results too early and jumping to incorrect conclusions.

- Determining the winner – which metric you will use to determine who wins your battle. For our example, the metric is “Cost per result,” since the campaign is being optimized towards landing page views.

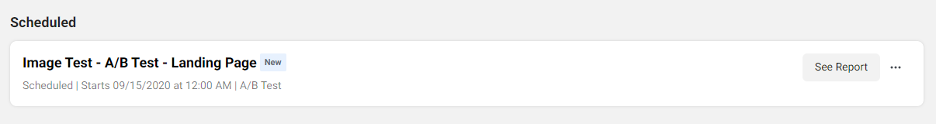

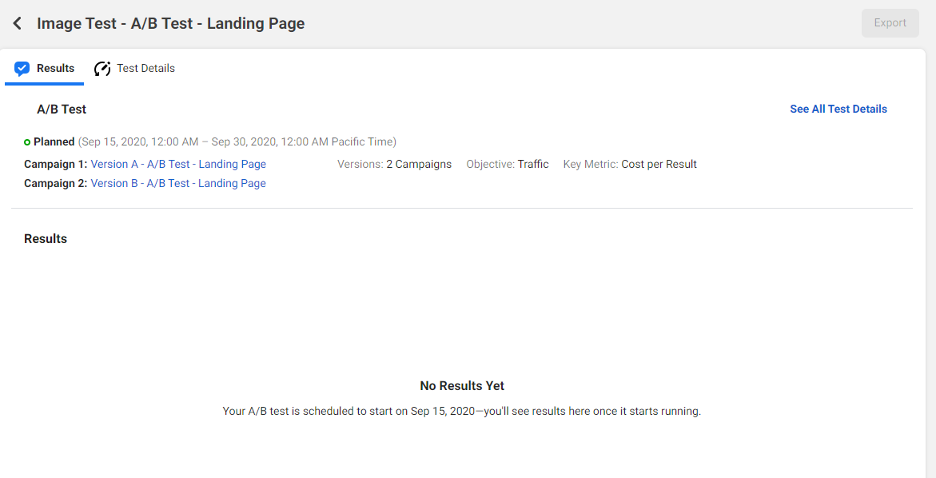
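To see why a bigger budget raises test power, here is a rough sketch using the textbook power approximation for a two-sided test on two proportions. Facebook does not publish how it computes “Test Power,” so this formula is a stand-in, and the result rates (2.0% vs. 2.5%) and the cost of reaching 1,000 people ($35) are hypothetical values chosen only to show the trend.

```python
from statistics import NormalDist


def approx_power(rate_a: float, rate_b: float, people_per_variant: int,
                 alpha: float = 0.05) -> float:
    """Approximate power of a two-sided two-proportion z-test.

    Assumes both rates are strictly between 0 and 1 and that each
    variant reaches the same number of people.
    """
    std_error = ((rate_a * (1 - rate_a) + rate_b * (1 - rate_b))
                 / people_per_variant) ** 0.5
    z_critical = NormalDist().inv_cdf(1 - alpha / 2)
    # Ignores the tiny chance of a significant result in the wrong direction.
    return NormalDist().cdf(abs(rate_a - rate_b) / std_error - z_critical)


def people_reached(budget_per_variant: float, cost_per_thousand: float) -> int:
    """Hypothetical reach model: budget buys people at a flat rate."""
    return round(budget_per_variant / cost_per_thousand * 1000)


for budget in (175.0, 700.0):
    n = people_reached(budget, cost_per_thousand=35.0)
    power = approx_power(0.020, 0.025, n)
    print(f"budget per variant ${budget:.0f}: reach {n}, power {power:.0%}")
```

Under these assumptions the smaller budget falls well short of the recommended 80% threshold, while the $700-per-variant budget clears it. The exact figures depend entirely on the assumed rates and costs, so use the sketch for intuition rather than planning.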

Click “Create Test” to finish. This creates a duplicate campaign in your Ads Manager, but you can track your test results and settings in the Experiments section by clicking the test name.

Once your test is done, either by running the full test term or by reaching statistically significant results, Facebook will declare a winner.
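If you want a feel for what “statistically significant” means here, the sketch below runs a standard two-proportion z-test on the result rates of two variants. This is not Facebook's method, which is not described in this article; the function names and the sample counts are assumptions for illustration only.

```python
from statistics import NormalDist
from typing import Optional


def two_proportion_p_value(results_a: int, reach_a: int,
                           results_b: int, reach_b: int) -> float:
    """Two-sided p-value for the difference between two result rates."""
    pooled = (results_a + results_b) / (reach_a + reach_b)
    std_error = (pooled * (1 - pooled) * (1 / reach_a + 1 / reach_b)) ** 0.5
    if std_error == 0:
        return 1.0  # no variation at all, so nothing to distinguish
    z = (results_a / reach_a - results_b / reach_b) / std_error
    return 2 * (1 - NormalDist().cdf(abs(z)))


def pick_winner(results_a: int, reach_a: int,
                results_b: int, reach_b: int,
                alpha: float = 0.05) -> Optional[str]:
    """Return "A" or "B" if the difference is significant, else None."""
    p_value = two_proportion_p_value(results_a, reach_a, results_b, reach_b)
    if p_value >= alpha:
        return None
    return "A" if results_a / reach_a > results_b / reach_b else "B"


# Hypothetical counts: results (landing page views) out of people reached.
print(pick_winner(400, 20_000, 500, 20_000))  # clear gap between variants
print(pick_winner(400, 20_000, 405, 20_000))  # gap too small to call
```

With equal budgets, the variant that earns more results also has the lower cost per result, which is why comparing result rates is a reasonable proxy in this simplified setting.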

When to A/B test

You should A/B test when you are looking to improve your campaign and have a hypothesis that you’d like to explore in a controlled environment. A/B tests typically work best for long-term campaigns with budget to spare. Since the test will provide a “winner,” the results help inform the campaign strategy moving forward. This process is of little use if you have a limited-term campaign or very little budget. A/B tests also work best with minimal variations, so that performance can be clearly attributed to a specific change and not to multiple variables.

When NOT to A/B test

You should reconsider running an A/B test if you have too many variables you are trying to test. Mixing two variables in a test makes it harder to figure out where your result came from. Suppose you are running a test to find out whether persona-specific creative performs better than scenic-specific creative. If you use different copy and calls-to-action in each image variation, you will not be able to attribute the test result to the creative type.

You should also avoid testing minor changes to ad copy or imagery. A good rule of thumb is to test ads that are different conceptually, for results that will impact your campaign’s future. Different copy and small image variations can run against each other once your campaign strategy is established.

You should not run an A/B test on an audience while another campaign targeting that same audience is running. The overlap will contaminate your test results, and you will not be able to trust them.

Final thoughts: machine learning and A/B testing

Facebook A/B testing is completely different from letting your campaign optimize to the best performing ad. Advertisers, looking to save budget and time, usually run two or more creative variations to allow Facebook to optimize, via its machine learning mechanisms, for the best performing ad, audience, and placement. Once the algorithm realizes which ads in which placements are performing better, most of the budget will be spent running that variation, as much as needed to achieve the optimization goal chosen for your campaign.
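The contrast between the two approaches can be shown with a toy budget allocator. The even split mirrors what an A/B test does; the performance-weighted split is only a loose stand-in for delivery optimization, since Facebook's actual algorithm is not public. The function names, the `explore_share` parameter, and the cost figures are all assumptions.

```python
from typing import Dict


def even_split(budget: float, cost_per_result: Dict[str, float]) -> Dict[str, float]:
    """A/B-test style: every ad gets the same budget, whatever its performance."""
    return {name: budget / len(cost_per_result) for name in cost_per_result}


def performance_weighted_split(budget: float,
                               cost_per_result: Dict[str, float],
                               explore_share: float = 0.1) -> Dict[str, float]:
    """Toy optimizer: cheaper ads get more budget.

    A small explore_share is still spread evenly so no ad is starved.
    Assumes every cost per result is positive.
    """
    n = len(cost_per_result)
    weights = {name: 1.0 / cost for name, cost in cost_per_result.items()}
    total_weight = sum(weights.values())
    return {
        name: budget * (explore_share / n
                        + (1 - explore_share) * weight / total_weight)
        for name, weight in weights.items()
    }


# Hypothetical observed cost per result for two ads.
observed = {"A": 2.0, "B": 1.0}
print(even_split(1400.0, observed))
print(performance_weighted_split(1400.0, observed))
```

The weighted split pushes most of the budget to the cheaper ad, which is efficient but means the two ads never get comparable exposure. The even split costs more in the short term and, in exchange, produces a fair comparison.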

Even though A/B testing is not an efficient way to run campaigns, it can be a great tool for advertisers who can, and want to, test their hypotheses in a controlled environment. It can lead to findings that will help grow your audience, improve your results, and better your strategy. You just need to be patient and careful when conducting your tests to make the most of what they have to offer.

May the best ad win.